JMM

Reference: JavaGuide

Concurrency Issues

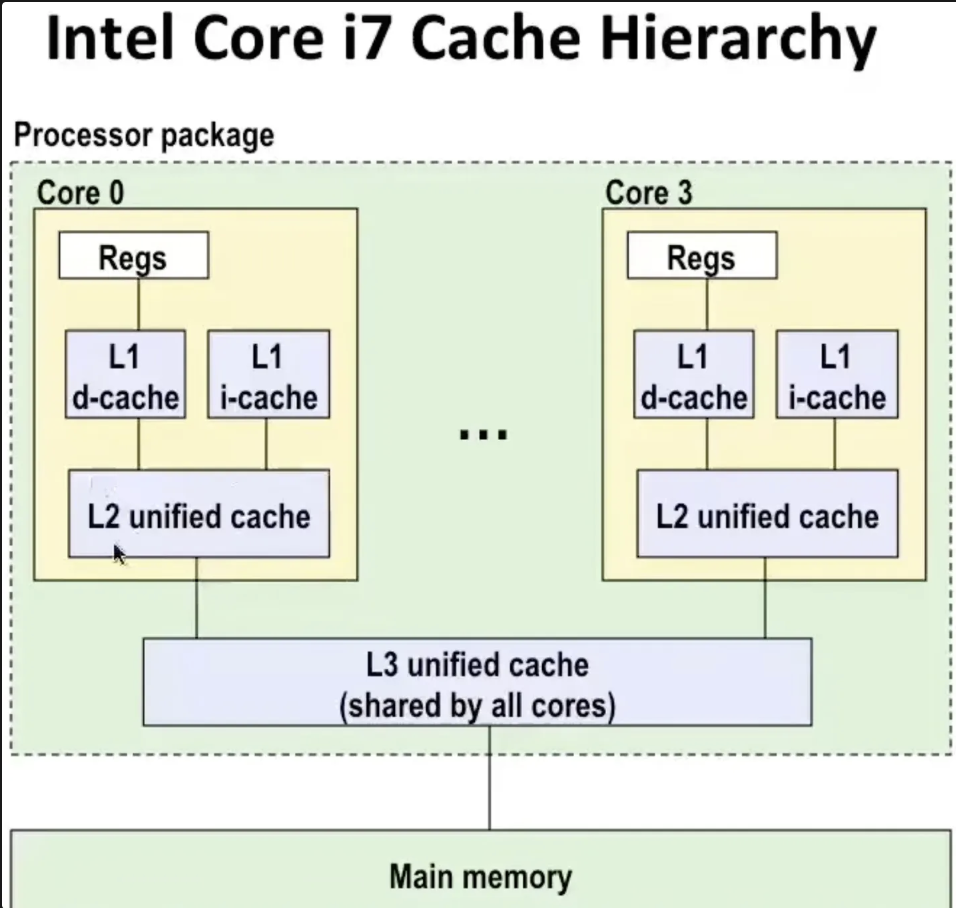

CPU Cache

Each CPU core may hold its own cached copy of a variable. That copy can be inconsistent with the value in main memory, leading to calculation errors.

Instruction-Level Reordering

Compiler Optimization Reordering

Done by the JVM and its JIT compiler. For Java programs, instructions may be reordered during compilation as long as the program’s single-threaded semantics do not change, similar to the optimization levels of other compilers (for example -O1 and -O2 in GCC).

Solution: Prohibit compiler reordering

Instruction Parallel Reordering

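Done by the CPU and related to its pipeline. Instructions from a single thread that do not depend on each other can be overlapped or executed out of order on different hardware units of the CPU.

Instruction-level parallelism preserves the result of a single thread, but it cannot guarantee safety when multiple threads execute concurrently.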

Solution: Memory barriers

JMM

Java Memory Model.

Why does Java need its own memory model? Java programs must behave consistently across different operating systems and hardware, whose memory models differ. Java therefore cannot rely directly on the memory model the platform provides and defines its own.

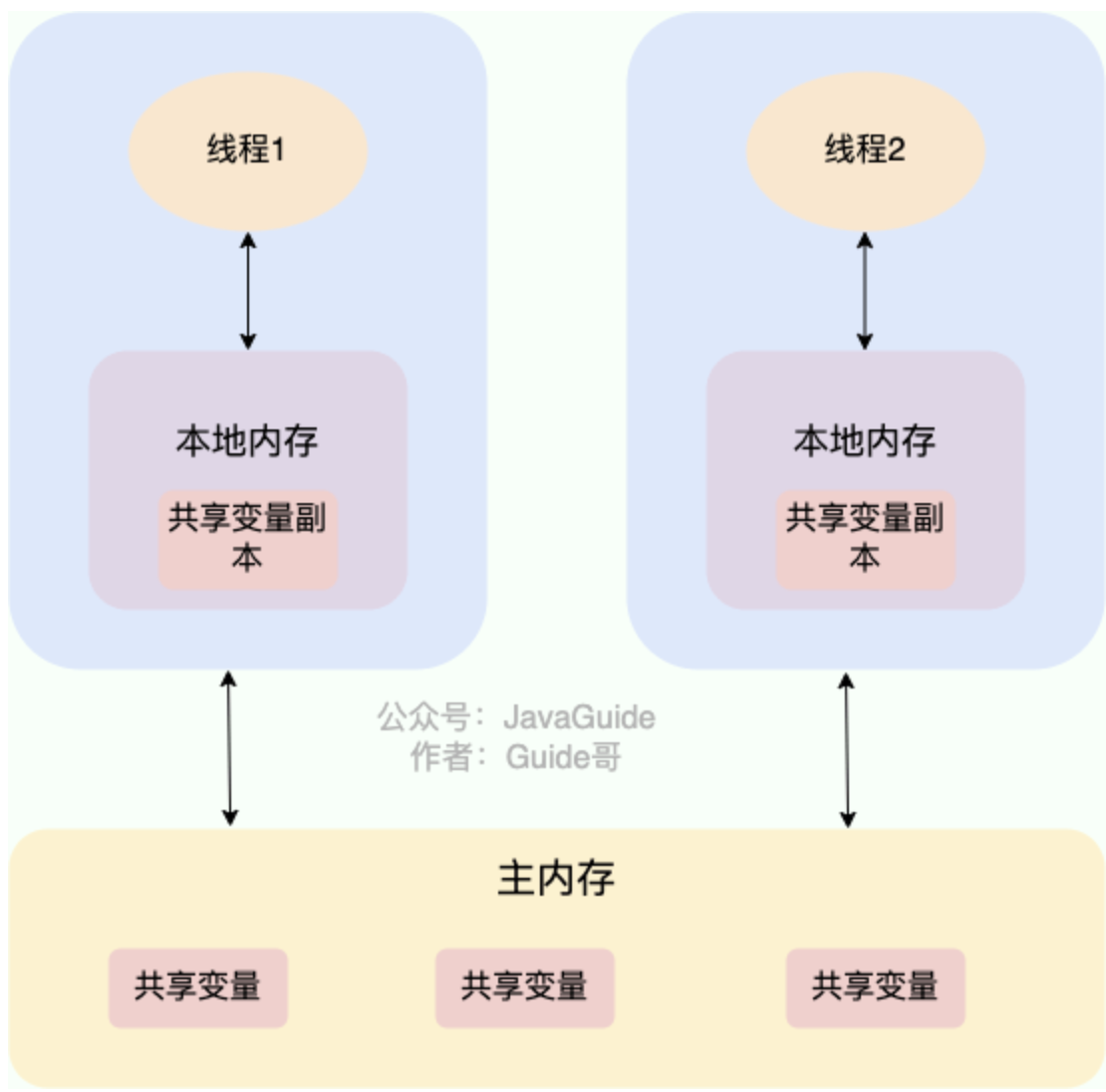

Abstraction

JMM abstracts the concepts of local memory and main memory. All object instances exist in main memory, while local memory is exclusive to one thread and holds that thread’s copies of shared variables. One thread cannot access another thread’s local memory.

JMM defines eight synchronization operations (lock, read, load…) and a set of rules for them, for example that a new variable can only be created in main memory.

Happens-Before

If one operation happens-before another, the result of the first is visible to the second (regardless of whether they are in the same thread), and the first is ordered before the second. The JVM may still reorder the two operations as long as the observable result is the same as if they had executed in that order.

Rules:

- Volatile variable rule: A write to a volatile variable happens-before subsequent reads of that volatile variable.

- Program order rule: Within a thread, operations written earlier in code order happen-before operations written later.
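
The two rules above combine (happens-before is transitive) to make a common publication idiom safe: an ordinary write followed by a volatile write is visible to any thread that reads the volatile variable and sees the new value. The following is a minimal sketch of that idea, not taken from the original notes; the class name HappensBeforeDemo and its fields are hypothetical.

```java
// Hypothetical demo class; all names are illustrative assumptions.
class HappensBeforeDemo {
    static int data = 0;                    // ordinary (non-volatile) field
    static volatile boolean ready = false;  // volatile flag used to publish data

    // Starts one writer and one reader and returns the value the reader observed.
    static int runOnce() {
        data = 0;
        ready = false;
        int[] seen = new int[1];

        Thread reader = new Thread(() -> {
            while (!ready) {
                // spin: each iteration performs a volatile read of ready
            }
            seen[0] = data;                 // ordered after the volatile read
        });
        Thread writer = new Thread(() -> {
            data = 42;                      // (1) ordinary write, earlier in program order
            ready = true;                   // (2) volatile write publishes (1)
        });

        reader.start();
        writer.start();
        try {
            writer.join();
            reader.join();                  // makes seen[0] visible to the caller
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce());      // prints 42
    }
}
```

If ready were not volatile, the reader could spin forever or observe data == 0, because nothing would order the two threads’ operations.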

Three Important Characteristics

- Atomicity: A group of operations either all succeed or none of them execute.

- Visibility: If a thread modifies a shared variable, other threads can immediately see the latest value.

- Ordering: Instruction reordering is unsafe in multi-threaded code; volatile can prohibit reordering.

Volatile

Declaring a variable as volatile tells the JVM that this variable is shared and unstable. It guarantees visibility.

- Every read of this variable must fetch from main memory.

- Memory barriers are inserted around reads and writes of this variable to prohibit instruction reordering.

- Does not guarantee atomicity

Volatile is needed when creating singleton objects with double-checked locking

uniqueInstance = new Singleton() is executed in three steps.

1. Allocate memory space in the heap

2. Initialize the uniqueInstance object

3. Point uniqueInstance to the allocated memory address

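Instruction reordering may execute these steps in the order 1, 3, 2. If thread A has executed steps 1 and 3 and the CPU then switches to thread B, which checks if (uniqueInstance == null), B sees a non-null reference, concludes the instance already exists, and returns an object that has not been initialized yet.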
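
The original listing did not survive in these notes, so here is a minimal sketch of the usual double-checked locking form. The field name uniqueInstance follows the text above; the accessor name getUniqueInstance is an assumption.

```java
class Singleton {
    // volatile prevents the reference from being published before initialization finishes
    private static volatile Singleton uniqueInstance;

    private Singleton() {
        // private constructor: instances are created only through getUniqueInstance()
    }

    static Singleton getUniqueInstance() {
        if (uniqueInstance == null) {                // first check, without locking
            synchronized (Singleton.class) {
                if (uniqueInstance == null) {        // second check, under the lock
                    uniqueInstance = new Singleton();
                }
            }
        }
        return uniqueInstance;
    }
}
```

The first check keeps the common path lock-free once the instance exists; the second check stops two threads that both passed the first check from creating two instances.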

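Consider several threads that each run inc++ on a shared volatile counter, with an expected total of 2500. The actual output is less than 2500. Reason: inc++ is not atomic; it consists of three steps.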

1. Read inc value

2. Perform +1

3. Write back to memory

If thread A reads inc=1 and, before it performs step 2, the CPU switches to thread B, which also reads inc=1, both threads write 2 back and one increment is lost.
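
A sketch of this experiment follows. The original listing is missing, so the thread count and iteration count (5 threads, 500 increments each, giving 2500) are assumptions, as is the class name CounterDemo. An AtomicInteger runs alongside the volatile counter to show one possible fix.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CounterDemo {
    static volatile int inc = 0;                        // visible to all threads, but inc++ is not atomic
    static final AtomicInteger safeInc = new AtomicInteger();

    // Runs `threads` threads, each performing `perThread` increments on both counters.
    static void run(int threads, int perThread) {
        inc = 0;
        safeInc.set(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    inc++;                              // read, add, write: updates can be lost
                    safeInc.incrementAndGet();          // one atomic read-modify-write
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    public static void main(String[] args) {
        run(5, 500);
        System.out.println("volatile inc  = " + inc);            // may be less than 2500
        System.out.println("AtomicInteger = " + safeInc.get());  // always 2500
    }
}
```

Guarding inc++ with synchronized or a ReentrantLock would fix the lost updates as well.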

Optimistic Lock, Pessimistic Lock

Pessimistic Lock

Locks the shared resource for every read and write; threads that do not hold the lock are blocked. Examples: synchronized and ReentrantLock. Suitable for write-heavy scenarios, where it avoids repeated failed retries.

Disadvantage: Under high concurrency, the large number of blocked threads hurts performance.

Optimistic Lock

Takes no lock up front; it only checks, when committing a change, whether another thread has modified the shared resource in the meantime. Suitable for read-heavy scenarios, where it avoids the performance cost of frequent locking.

Version Number

Thread A reads the data and gets version=1; thread B reads it and also gets version=1. A modifies the data and updates it to version=2. B then tries to write its own change, but the write-back compares B’s expected version=1 with the current version=2, finds them inconsistent, and B’s operation fails.
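
The scenario above can be sketched in memory as follows. The class VersionedRecord and its methods are hypothetical. The synchronized keyword here only makes the compare-and-update step atomic, standing in for whatever atomic conditional update the real data store offers.

```java
// Hypothetical in-memory record guarded by a version number.
class VersionedRecord {
    private int value;
    private int version = 1;

    synchronized int readVersion() {
        return version;
    }

    synchronized int readValue() {
        return value;
    }

    // Applies the change only if the record still has the version the caller read earlier.
    synchronized boolean update(int newValue, int expectedVersion) {
        if (version != expectedVersion) {
            return false;               // someone else committed first: caller must re-read and retry
        }
        value = newValue;
        version++;                      // every successful write bumps the version
        return true;
    }
}
```

A caller whose update returns false typically re-reads the record and retries, or reports a conflict.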

CAS

CAS stands for Compare-And-Swap. In Java it is exposed as native methods in the Unsafe class, which are implemented with the CPU’s atomic compare-and-exchange instruction (cmpxchg on x86).

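The method takes an object o, a field offset, an expected value, and an update value. It sets the field at offset within o to update only if the field’s current value equals expected. It returns true if the field was updated and false otherwise.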
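
Unsafe is not meant to be called from application code, so this sketch shows the same compare-then-update semantics through the public AtomicInteger.compareAndSet method instead. The class name CasDemo is hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasDemo {
    // Performs two CAS attempts on a value that starts at 5 and reports what happened.
    static String demo() {
        AtomicInteger value = new AtomicInteger(5);
        boolean first = value.compareAndSet(5, 6);   // current value is 5 == expected: updated to 6
        boolean second = value.compareAndSet(5, 7);  // current value is 6 != expected: left unchanged
        return first + " " + second + " " + value.get();
    }

    public static void main(String[] args) {
        System.out.println(demo());                  // prints "true false 6"
    }
}
```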

Atomic Classes

Java provides atomic classes built on Unsafe’s atomic operations, for example AtomicInteger for atomic increments.

Unsafe’s getAndAddInt spins: it places compareAndSwapInt in a while loop and retries whenever the CAS fails.

Under heavy contention the CAS can fail repeatedly, and the resulting spinning wastes CPU time and hurts performance.
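
The retry loop can be sketched with public APIs as shown below. This is a hand-written equivalent for illustration, not the JDK source; the class SpinAdd and its helper runConcurrently are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SpinAdd {
    // Adds delta atomically by retrying a CAS until it succeeds; returns the previous value.
    static int getAndAdd(AtomicInteger cell, int delta) {
        int current;
        do {
            current = cell.get();                                   // read the latest value
        } while (!cell.compareAndSet(current, current + delta));    // lost the race: read again and retry
        return current;
    }

    // Runs `threads` threads that each add 1 `perThread` times; returns the final total.
    static int runConcurrently(int threads, int perThread) {
        AtomicInteger cell = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    getAndAdd(cell, 1);
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return cell.get();
    }
}
```

Every failed compareAndSet costs one more trip around the loop, which is the spinning overhead described above.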

ABA Problem

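Suppose thread A wants to change val from 1 to 2 and reads val=1. Before A performs its CAS, thread B changes val to 3 and then back to 1. A’s CAS sees val==1, concludes nothing has changed, and succeeds even though the value was modified in between.

Solution: Attach a version number or timestamp to the value and compare it as well.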
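
The JDK’s AtomicStampedReference pairs a reference with an integer stamp that serves as the version number. The sketch below replays the A/B interleaving sequentially in one thread so the outcome is deterministic; the class AbaDemo is hypothetical.

```java
import java.util.concurrent.atomic.AtomicStampedReference;

class AbaDemo {
    // Returns whether A's stale CAS succeeds after B changed the value 1 -> 3 -> 1.
    static boolean staleUpdateSucceeds() {
        // Small Integer values are cached by autoboxing, so reference comparison works here.
        AtomicStampedReference<Integer> val = new AtomicStampedReference<>(1, 0);

        // Thread A's view: value 1 at stamp 0.
        Integer valueSeenByA = val.getReference();
        int stampSeenByA = val.getStamp();

        // Thread B's changes: 1 -> 3 -> 1, bumping the stamp each time.
        val.compareAndSet(1, 3, 0, 1);
        val.compareAndSet(3, 1, 1, 2);

        // A's CAS: the value matches (1), but the stamp is now 2 instead of 0, so it fails.
        return val.compareAndSet(valueSeenByA, 2, stampSeenByA, stampSeenByA + 1);
    }

    public static void main(String[] args) {
        System.out.println(staleUpdateSucceeds());   // prints false
    }
}
```

With a plain AtomicInteger the same sequence would end with A’s compareAndSet(1, 2) succeeding, which is exactly the ABA problem.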

Synchronized

The JVM’s built-in lock, implemented via the object header and the lock upgrade mechanism.

The synchronized keyword is implemented through the monitor mechanism. Every object has an associated monitor lock in the JVM. When a thread enters a synchronized block, it tries to acquire that object’s monitor lock; if another thread holds it, the current thread waits. The JVM’s monitor lock is lighter than an OS mutex: it tries spinning before blocking in order to reduce context switches, which helps especially on multi-core machines.

synchronized also guarantees visibility: changes made while holding the lock are visible to any thread that later acquires the same lock. In this respect it is similar to volatile, although volatile is lighter.

- On an instance method: locks the object the method is called on

- On a static method: locks the current class

- On a code block: locks the class or object specified in the parentheses
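
The three forms listed above look like this in code. This is an illustrative sketch; the class SyncForms, its counters, and the helper hammer are hypothetical.

```java
class SyncForms {
    private static int staticCount;
    private int instanceCount;
    private int guardedCount;
    private final Object guard = new Object();

    // 1. Instance method: the lock is the calling object (this).
    synchronized void incInstance() {
        instanceCount++;
    }

    // 2. Static method: the lock is the Class object, SyncForms.class.
    static synchronized void incStatic() {
        staticCount++;
    }

    // 3. Code block: the lock is the object named in the parentheses.
    void incGuarded() {
        synchronized (guard) {
            guardedCount++;
        }
    }

    // Calls all three forms from several threads and returns the three totals.
    static int[] hammer(int threads, int perThread) {
        staticCount = 0;
        SyncForms target = new SyncForms();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    target.incInstance();
                    incStatic();
                    target.incGuarded();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return new int[] { target.instanceCount, staticCount, target.guardedCount };
    }
}
```

Because the three forms lock three different objects, they do not exclude one another; each counter is only protected against concurrent calls of its own method.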

Underlying Principle

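- For code blocks, the bytecode contains a “monitorenter” instruction before the block and a “monitorexit” instruction after it. The monitor itself is implemented in C++.

- For methods, the method’s access flags in the bytecode include ACC_SYNCHRONIZED, which marks it as a synchronized method.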

Lock Upgrade

Mark Word information:

| Lock State | 56 bits | 4 bits | 1 bit (Bias Lock Flag) | 2 bits (Lock Flag) |
|---|---|---|---|---|
| No Lock | Object Hash Code | Generation Age | 0 | 01 |
| Biased Lock | Thread ID + Epoch | Generation Age | 1 | 01 |
| Lightweight Lock | Pointer to Lock Record | - | - | 00 |
| Heavyweight Lock | Pointer to Monitor | - | - | 10 |
| GC Mark | - | - | - | 11 |

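- No Lock: The mark word records the object’s hash code and generation age.

- Biased Lock: Only one thread ever needs the lock. The current thread’s ID is written into the mark word via CAS. If the same thread requests the lock again, it gets it directly, so the lock is reentrant. (Biased locking is deprecated and disabled by default since JDK 15.)

- Lightweight Lock: Multiple threads access the lock alternately. When a second thread requests the lock and sees that it is biased toward another thread, the lock is upgraded to lightweight. The acquiring thread creates a lock record in its own stack, holding the original mark word and a pointer to the lock object, and the mark word becomes a pointer to that lock record. A thread that fails to acquire the lock spins in user space.

- Heavyweight Lock: Multiple threads contend for the lock at the same time. The mark word points to a monitor, and threads that cannot acquire it are blocked.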

ReentrantLock

Differences from synchronized:

- Interruptible: A thread that calls lock.lockInterruptibly() and cannot acquire the lock can be interrupted by another thread while it waits.

- Timeout: A thread can give up if it cannot acquire the lock in time, for example lock.tryLock(2, TimeUnit.SECONDS).

- Fair lock option: Threads acquire the lock in the order they requested it (FIFO). Enforcing FIFO causes more frequent context switches, which hurts performance.

- Multiple condition variables: Waiting threads can be woken selectively through different conditions.

- synchronized is implemented inside the JVM, so its internals are not visible from Java code, whereas ReentrantLock is a JDK API used through explicit lock() and unlock() calls.
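
The timeout and interruption features can be exercised with a small sketch. The class LockFeatures and its method names are hypothetical, and the 100 ms timeout is an arbitrary choice to keep the demo short.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

class LockFeatures {
    // Returns true if a second thread gave up after timing out on a lock the caller holds.
    static boolean timedAttemptGivesUp() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean acquired = new AtomicBoolean(true);
        lock.lock();
        try {
            Thread t = new Thread(() -> {
                try {
                    boolean ok = lock.tryLock(100, TimeUnit.MILLISECONDS);
                    acquired.set(ok);
                    if (ok) {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.start();
            join(t);                         // the lock stays held until the waiter has finished
        } finally {
            lock.unlock();
        }
        return !acquired.get();
    }

    // Returns true if a thread waiting in lockInterruptibly() was released by an interrupt.
    static boolean waiterCanBeInterrupted() {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean interrupted = new AtomicBoolean(false);
        lock.lock();
        try {
            Thread t = new Thread(() -> {
                try {
                    lock.lockInterruptibly(); // throws instead of waiting forever
                    lock.unlock();
                } catch (InterruptedException e) {
                    interrupted.set(true);
                }
            });
            t.start();
            t.interrupt();
            join(t);
        } finally {
            lock.unlock();
        }
        return interrupted.get();
    }

    private static void join(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

A fair lock is requested with new ReentrantLock(true). In all cases unlock() belongs in a finally block, because unlike synchronized the lock is not released automatically when an exception is thrown.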

Reentrancy

Both synchronized and ReentrantLock are reentrant: a thread can acquire again a lock that it already holds.
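
A minimal sketch of reentrancy for both kinds of lock follows; the class ReentrancyDemo and its methods are hypothetical. ReentrantLock.getHoldCount() reports how many times the current thread has acquired the lock.

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancyDemo {
    private static final ReentrantLock lock = new ReentrantLock();

    // Acquires the lock, then calls inner(), which acquires the same lock again.
    static int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    static int inner() {
        lock.lock();                        // same thread already owns the lock: no deadlock
        try {
            return lock.getHoldCount();     // 2 when called from outer()
        } finally {
            lock.unlock();                  // each lock() needs a matching unlock()
        }
    }

    // The same pattern with synchronized: both methods lock ReentrancyDemo.class.
    static synchronized int syncOuter() {
        return syncInner();
    }

    static synchronized int syncInner() {
        return 1;
    }

    public static void main(String[] args) {
        System.out.println(outer());        // prints 2
        System.out.println(syncOuter());    // prints 1
    }
}
```

Without reentrancy, the nested acquisition in inner() or syncInner() would make the thread deadlock on itself.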

Condition Variables

After acquiring the lock, a thread that is not yet able to proceed calls condition1.await(). This releases the lock and adds the thread to condition1’s wait queue.

When another thread performs an operation that makes condition1 true, it calls condition1.signal() to wake one waiting thread, or condition1.signalAll() to wake all of them.

Difference from synchronized: synchronized has only one implicit condition per lock, so notifyAll() wakes every waiting thread, whatever it is waiting for.
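
A bounded buffer is a common way to show why two conditions help: producers wait on one condition and consumers on another, so each signal wakes only a thread that can make progress. The sketch below assumes a hypothetical class BoundedBuffer with a capacity chosen by the caller; transferSum is a small helper used only to exercise it.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

class BoundedBuffer {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();   // producers wait here
    private final Condition notEmpty = lock.newCondition();  // consumers wait here
    private final Deque<Integer> items = new ArrayDeque<>();
    private final int capacity;

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    void put(int x) throws InterruptedException {
        lock.lock();
        try {
            while (items.size() == capacity) {
                notFull.await();            // releases the lock while waiting
            }
            items.addLast(x);
            notEmpty.signal();              // wakes one consumer, never a producer
        } finally {
            lock.unlock();
        }
    }

    int take() throws InterruptedException {
        lock.lock();
        try {
            while (items.isEmpty()) {
                notEmpty.await();
            }
            int x = items.removeFirst();
            notFull.signal();               // wakes one producer, never a consumer
            return x;
        } finally {
            lock.unlock();
        }
    }

    // Sends 1..n through a buffer of capacity 2 and returns the sum received.
    static int transferSum(int n) {
        BoundedBuffer buffer = new BoundedBuffer(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) {
                    buffer.put(i);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < n; i++) {
                sum += buffer.take();
            }
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum;
    }
}
```

The await() calls sit inside while loops so that a woken thread re-checks its condition before proceeding.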